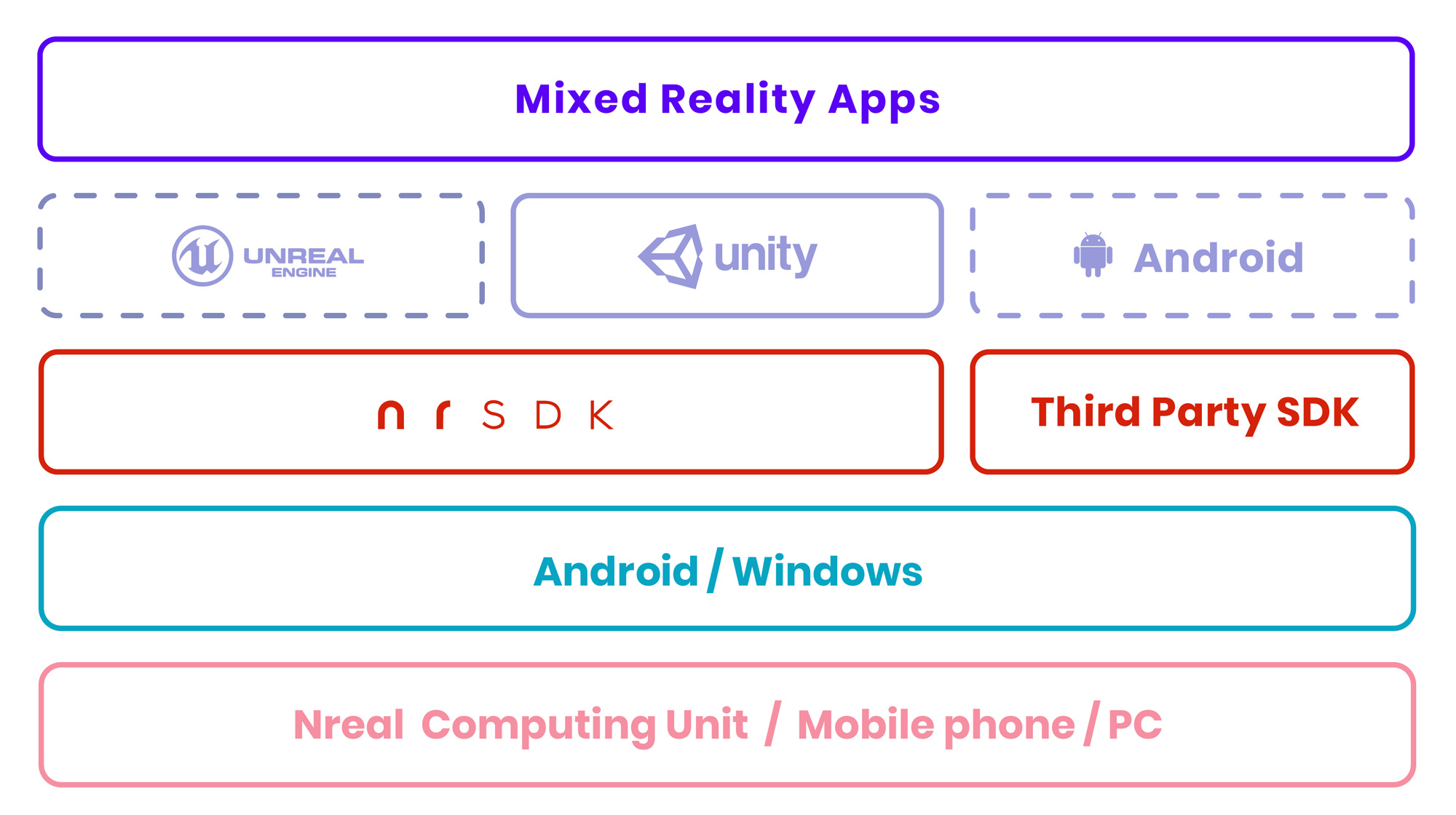

Introducing NRSDK

NRSDK is Nreal’s platform for developing mixed reality experiences. Using a simple development process and a high-level API, NRSDK provides a set of powerful MR features and enables your Nreal glasses to understand the real world.

NRSDK supports Unity 2018.2.X and above as its development environment.

The core features provided by NRSDK are Spatial Computing, Optimized Rendering, and Multi-modal Interactions.

Spatial Computing includes motion tracking, plane tracking, image tracking, and hand tracking, allowing the glasses to track their real-time position relative to the world and understand the environment around them.

Optimized Rendering is automatically applied to the applications and runs in the backend to minimize latency and reduce judder, enhancing the overall user experience.

Multi-modal Interactions provide intuitive choices of interaction for different use cases.

Developer Tools are provided so you can better develop and debug apps.

Spatial Computing

6DoF Tracking uses the two SLAM cameras, one on each side of the Nreal glasses, to identify feature points and track how these points move over time. By combining the movement of these points with readings from the glasses’ IMU sensors, NRSDK tracks both the position and orientation of the glasses as they move through space. 6DoF tracking also provides developers with real-time map construction and 3D point clouds, giving applications information about the physical structure of the environment.
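To make "position and orientation" concrete, the following is a minimal, generic sketch of a 6DoF pose: three translational degrees of freedom (a position vector) plus three rotational ones (stored here as a unit quaternion). The class and function names are hypothetical and are not part of the NRSDK API. A right-handed coordinate system with y pointing up is assumed for illustration only.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose6DoF:
    """Hypothetical container for a 6DoF pose (not an NRSDK type)."""
    position: tuple  # (x, y, z) in metres, world frame
    rotation: tuple  # unit quaternion (w, x, y, z)

    def transform_point(self, p):
        """Map a point from the glasses' local frame into the world frame."""
        w, x, y, z = self.rotation
        px, py, pz = p
        # Rotate p with the rotation matrix expanded from the quaternion.
        rx = (1 - 2 * (y * y + z * z)) * px + 2 * (x * y - w * z) * py + 2 * (x * z + w * y) * pz
        ry = 2 * (x * y + w * z) * px + (1 - 2 * (x * x + z * z)) * py + 2 * (y * z - w * x) * pz
        rz = 2 * (x * z - w * y) * px + 2 * (y * z + w * x) * py + (1 - 2 * (x * x + y * y)) * pz
        # Then translate by the pose position.
        tx, ty, tz = self.position
        return (rx + tx, ry + ty, rz + tz)


def yaw_quaternion(angle_rad):
    """Unit quaternion for a rotation about the vertical (y) axis."""
    return (math.cos(angle_rad / 2), 0.0, math.sin(angle_rad / 2), 0.0)
```

For example, a pose at `(1.0, 1.5, 0.0)` with a 90-degree yaw maps the local point `(0, 0, 1)` to a world point one metre along the x axis from the pose position. A 3DoF device, by contrast, would report only the rotation part.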

Plane Detection enables Nreal glasses to detect flat surfaces in the environment, such as a table or a wall. Both horizontal and vertical surfaces are covered, although NRSDK 1.0.0 beta offers horizontal plane detection only. The transformation of each plane is continuously updated. As the glasses move around, a plane can be extended, and multiple planes can merge into one when they overlap.
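The merging behavior described above can be illustrated with a small sketch. It assumes horizontal planes are represented as axis-aligned rectangles at a given height, and that two planes merge when they sit at roughly the same height and their extents intersect. The data structure, the 2 cm height tolerance, and the merge rule are all assumptions made for illustration. NRSDK's actual plane representation and merge criteria are not described in this document.

```python
from dataclasses import dataclass


@dataclass
class HorizontalPlane:
    """Hypothetical axis-aligned patch of a horizontal surface (metres)."""
    height: float
    min_x: float
    max_x: float
    min_z: float
    max_z: float


def overlaps(a, b, height_tol=0.02):
    """True if both patches are at about the same height and their extents intersect."""
    same_level = abs(a.height - b.height) <= height_tol
    x_hit = a.min_x <= b.max_x and b.min_x <= a.max_x
    z_hit = a.min_z <= b.max_z and b.min_z <= a.max_z
    return same_level and x_hit and z_hit


def merge(a, b):
    """Return one plane covering both inputs (bounding rectangle, mean height)."""
    return HorizontalPlane(
        height=(a.height + b.height) / 2,
        min_x=min(a.min_x, b.min_x), max_x=max(a.max_x, b.max_x),
        min_z=min(a.min_z, b.min_z), max_z=max(a.max_z, b.max_z),
    )


def merge_overlapping(planes):
    """Repeatedly merge any overlapping pair until no overlapping pair remains."""
    planes = list(planes)
    merged = True
    while merged:
        merged = False
        for i in range(len(planes)):
            for j in range(i + 1, len(planes)):
                if overlaps(planes[i], planes[j]):
                    planes[i] = merge(planes[i], planes[j])
                    del planes[j]
                    merged = True
                    break
            if merged:
                break
    return planes
```

With this rule, two overlapping patches of the same tabletop collapse into a single larger plane, while a floor plane at a different height stays separate.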

Image Tracking allows apps to recognize physical images and build augmented reality experiences around them. By adding multiple reference images to the image database, more than one pre-trained image can be detected in a session.

Hand Tracking tracks the positions of key points on your hands and recognizes hand poses in real time. Hand poses are shown in the first-person view and can be used to interact with virtual objects directly in the world.

Optimized Rendering

NRSDK optimizes rendering performance in the backend to minimize the latency of the entire system and to reduce judder. The result is a smoother, more comfortable experience that helps avoid dizziness and motion sickness. You do not need to enable or tune rendering features, as they are applied automatically.

Warping: Instead of relying only on tracking data polled at the very beginning of each frame to render the image, NRSDK uses the latest predicted position of the Nreal glasses to warp (reproject) the rendered image and send it to the display before every VSync.
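The idea behind warping can be sketched in one dimension. The example below is a deliberately simplified, rotation-only illustration and is not NRSDK's implementation: it assumes a pinhole display model with a hypothetical focal length in pixels, positive yaw meaning the head turns to the right, and x increasing to the right on screen. Given the yaw used at render time and a newer predicted yaw, it computes how far the already-rendered image should be shifted so that content stays fixed in the world.

```python
import math


def reprojection_shift_px(render_yaw_rad, predicted_yaw_rad, focal_length_px):
    """Horizontal shift (pixels) compensating for head rotation since rendering.

    If the head has turned right since the frame was rendered, world-fixed
    content must move left on screen, hence the negative sign.
    """
    delta = predicted_yaw_rad - render_yaw_rad
    return -focal_length_px * math.tan(delta)


def warp_row(row, shift_px, fill=0):
    """Shift one row of pixels by a whole number of pixels, padding with `fill`."""
    s = int(round(shift_px))
    n = len(row)
    if s == 0:
        return list(row)
    if abs(s) >= n:
        return [fill] * n
    if s > 0:
        # Content moves right; pad on the left.
        return [fill] * s + list(row[:n - s])
    # Content moves left; pad on the right.
    return list(row[-s:]) + [fill] * (-s)
```

A real reprojection pass works on full images, handles all three rotation axes (and possibly translation), and runs on the GPU. The point of the sketch is only that the correction uses a pose newer than the one the frame was rendered with, which is why it can reduce perceived latency.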

Multi-modal Interactions

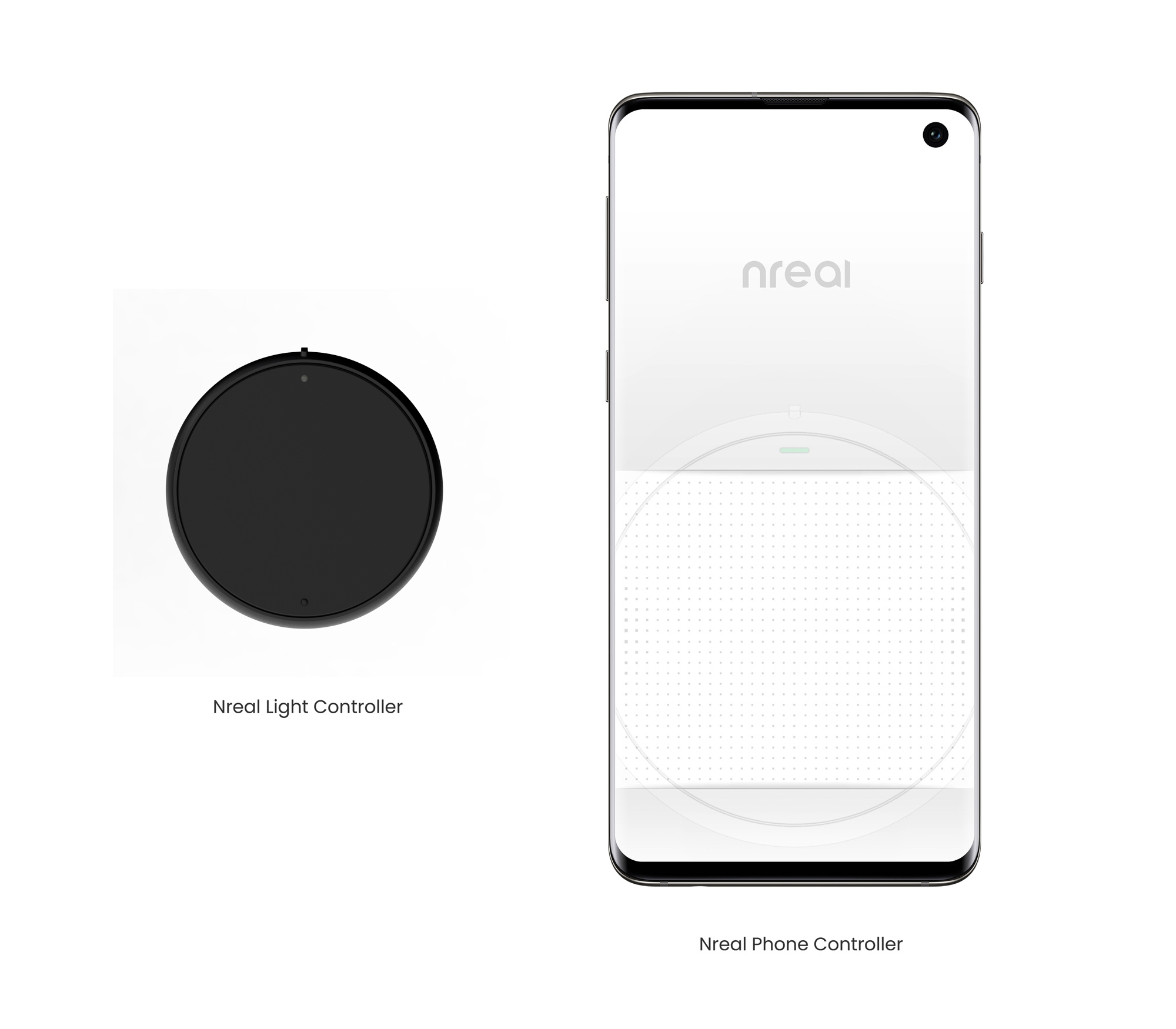

Nreal Light Controller (3DoF): A lightweight 3DoF controller for interacting with virtual objects in a natural and intuitive way.

Nreal Phone Controller (3DoF): An Android mobile phone can serve as a 3DoF controller when connected to Nreal glasses. The touchscreen supports gestures, and all interactions mirror those on the Nreal Light Controller.

For more interaction models, please refer to the Design Guide.

Developer Tools

Developer Tools, such as the Emulator Testing Tool and First Person View, are designed to help you build, test, and share your apps.

The Emulator Testing Tool works as an emulator running in the Unity Editor's Play mode. Inputs that would usually be read by the sensors on Nreal glasses are instead simulated using the keyboard, mouse, or smartphone. It ships with NRSDK and lets you test applications without a physical Nreal device.

First Person View lets you share your creations with others by showing them an Nreal Light user’s world on a 2D phone or tablet screen.

Others

NRSDK is designed as an open platform and can be integrated with third-party SDKs as plug-ins. For example, data from the RGB camera is available for third-party SDKs to run face detection algorithms.